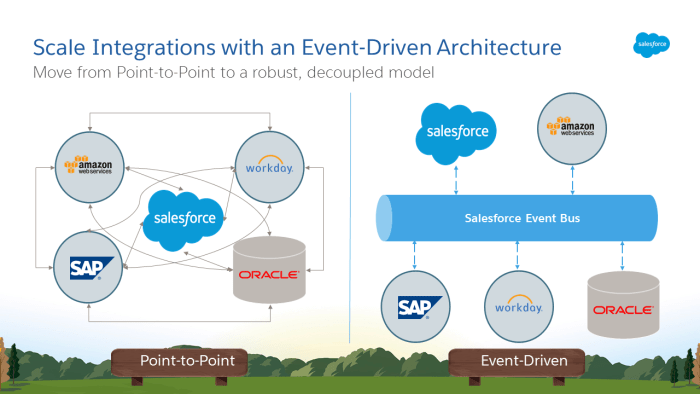

Event-Driven Architecture allows you to create more resilient and scalable integrations for a wide range of use cases. This approach enables us to develop modular, decoupled solutions that provide real-time (or near real-time) connections.

As you know, events are notification messages produced by a system and consumed by clients that have subscribed to them. By adopting events, we can create reusable and efficient integrations that keep data consistent across multiple systems.

Here are just a few use cases that can benefit from this approach:

- Flight updates: a passenger at an airport wants to be notified of changes to their gate or departure time.
- Order fulfilment: a customer orders their favourite book with a few clicks. Behind the scenes, multiple systems perform several operations: the order is created, the payment is processed, the delivery is scheduled, and an email is sent confirming the order and its details.
- Data synchronisation: a company wants to keep its customer data synchronised across its internal systems, so every change in the CRM must be propagated to the downstream systems.

What are Salesforce Streaming Events?

Salesforce provides an Event Bus to which events are published and to which clients connect through the Streaming APIs. The Event Bus is a high-throughput, scalable and durable platform capable of handling thousands or even millions of streaming events daily.

Events are stored in the Event Bus and, depending on the event type, retained for 24 to 72 hours. Clients can replay past events using a replay ID, a unique reference the Salesforce Event Bus assigns to each event.
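To make the retention and replay behaviour concrete, here is a minimal in-memory sketch of a bus that assigns replay IDs and drops events older than its retention window. It assumes the Salesforce convention that a replay ID of -1 means "new events only" and -2 means "all retained events". The class and method names are hypothetical, and the toy numbers events sequentially, whereas real replay IDs are not guaranteed to be contiguous, so treat the ordering comparison as a simplification.

```python
from dataclasses import dataclass, field

REPLAY_NEW_ONLY = -1      # only events published after subscribing
REPLAY_ALL_RETAINED = -2  # every event still inside the retention window


@dataclass
class StoredEvent:
    replay_id: int
    published_at: float  # seconds since an arbitrary epoch
    payload: dict


@dataclass
class InMemoryEventBus:
    """Toy stand-in for the Event Bus (hypothetical, for illustration only)."""

    retention_seconds: float
    _events: list = field(default_factory=list)
    _next_id: int = 1

    def publish(self, payload: dict, now: float) -> int:
        event = StoredEvent(self._next_id, now, payload)
        self._next_id += 1
        self._events.append(event)
        return event.replay_id

    def replay(self, replay_id: int, now: float) -> list:
        # Anything older than the retention window can no longer be replayed
        cutoff = now - self.retention_seconds
        retained = [e for e in self._events if e.published_at >= cutoff]
        if replay_id == REPLAY_NEW_ONLY:
            return []
        if replay_id == REPLAY_ALL_RETAINED:
            return retained
        # Otherwise return the events that came after the given replay ID
        return [e for e in retained if e.replay_id > replay_id]


bus = InMemoryEventBus(retention_seconds=72 * 3600)
first_id = bus.publish({"n": 1}, now=0)
bus.publish({"n": 2}, now=10)
```

A subscriber that remembers `first_id` can call `bus.replay(first_id, now=...)` after reconnecting and receive only the second event.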

Source: Which Streaming Event Do I Use?

There are 4 types of events in Salesforce:

Push Topic

Push Topic notifications are sent by Salesforce when a record changes. A SOQL query defines how the event is produced: it specifies the objects, the fields and the conditions under which the event is sent. Records can be tracked when they are created, updated, deleted or undeleted.
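PushTopic channels are themselves Salesforce records. As a sketch, the field map below could be sent as the JSON body of a REST API create request for the PushTopic sObject. The field names are PushTopic fields; the helper name, topic name, query and API version are illustrative assumptions. Note that a PushTopic query must include `Id` in its SELECT list.

```python
import json


def build_push_topic(name: str, soql: str, api_version: float = 56.0) -> dict:
    """Field map for a PushTopic record (helper name is hypothetical)."""
    return {
        "Name": name,
        "Query": soql,  # objects, fields and WHERE conditions all live in the query
        "ApiVersion": api_version,
        "NotifyForOperationCreate": True,
        "NotifyForOperationUpdate": True,
        "NotifyForOperationDelete": True,
        "NotifyForOperationUndelete": True,
        "NotifyForFields": "Referenced",  # notify when a field referenced in the query changes
    }


topic = build_push_topic(
    "UKAccountUpdates",
    "SELECT Id, Name, Phone FROM Account WHERE BillingCountry = 'United Kingdom'",
)
body = json.dumps(topic)
```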

Generic Event

Generic events are custom notifications that use an arbitrary string as the payload and are not tied to Salesforce data changes. They can only be triggered explicitly through the Streaming APIs.

Platform Event

Platform events are also custom notifications but, unlike generic events, they let you define a custom schema specifying the fields and data types you would like to send in the notification.

There are also standard platform events published by Salesforce. These events are mostly related to internal activities in Salesforce, like user and security events.
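To contrast with a generic event's free-form string, here is a sketch of a typed payload for a hypothetical platform event, `Order_Shipped__e`. The event and its custom fields (which, like custom object fields, carry the `__c` suffix) are invented for illustration; the point is that the schema fixes field names and types up front.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderShippedEvent:
    """Typed payload for a hypothetical platform event, Order_Shipped__e."""

    order_number: str
    tracking_code: str
    items_count: int

    def __post_init__(self):
        # The schema fixes the types up front, unlike a generic event's free-form string
        if not isinstance(self.items_count, int):
            raise TypeError("items_count must be an int")

    def to_payload(self) -> dict:
        # Custom event fields use the __c suffix, like custom object fields
        return {
            "Order_Number__c": self.order_number,
            "Tracking_Code__c": self.tracking_code,
            "Items_Count__c": self.items_count,
        }


event = OrderShippedEvent("00001234", "TRK-998877", 3)
payload_json = json.dumps(event.to_payload())
```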

Change Data Capture Event

Change Data Capture events are triggered when a record changes in Salesforce. Unlike Push Topic events, they don't require a SOQL query; you just select the objects for which you would like to receive notifications.

Any change to a record triggers an event, and the message contains header fields describing what changed, along with the new values.

As with Push Topic events, you can monitor records when they are created, updated, deleted or undeleted.
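The sketch below pulls the key facts out of a Change Data Capture payload. The header keys (`entityName`, `changeType`, `changedFields`, `recordIds`) follow Salesforce's `ChangeEventHeader`; the sample payload is trimmed and invented, and the function name is hypothetical.

```python
def summarise_change_event(payload: dict) -> dict:
    """Pull the routing facts out of a CDC event payload."""
    header = payload["ChangeEventHeader"]
    # Fields outside the header carry the record values sent with the event
    values = {k: v for k, v in payload.items() if k != "ChangeEventHeader"}
    return {
        "object": header["entityName"],       # e.g. "Account"
        "operation": header["changeType"],    # CREATE, UPDATE, DELETE, UNDELETE, ...
        "record_ids": header["recordIds"],
        "changed_fields": header.get("changedFields", []),
        "values": values,
    }


sample = {
    "ChangeEventHeader": {
        "entityName": "Account",
        "recordIds": ["001XXXXXXXXXXXXXXX"],
        "changeType": "UPDATE",
        "changedFields": ["Phone", "LastModifiedDate"],
    },
    "Phone": "+44 20 7946 0000",
    "LastModifiedDate": "2022-05-10T10:00:00.000Z",
}
summary = summarise_change_event(sample)
```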

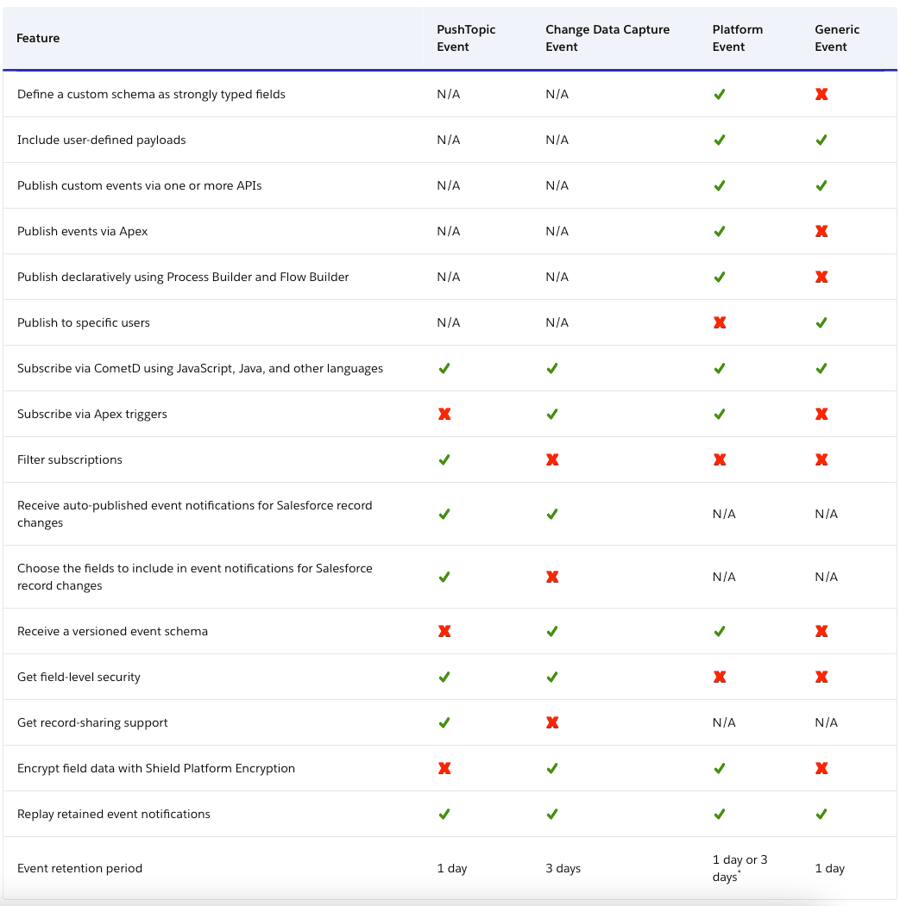

Comparing event features

Source: Streaming Event Features

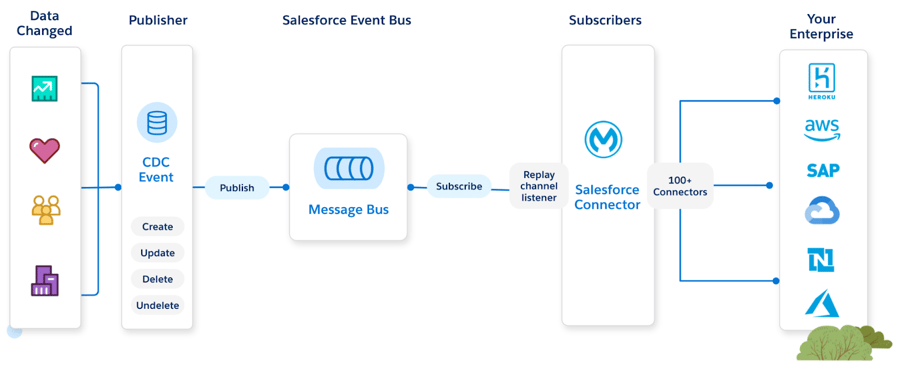

Integrating Salesforce Streaming Events with MuleSoft

MuleSoft is a natural fit for integrating with Salesforce thanks to its well-known ability to connect to virtually any system. Using the API-led approach, we can build reusable APIs that enable organisations to deliver and innovate quickly.

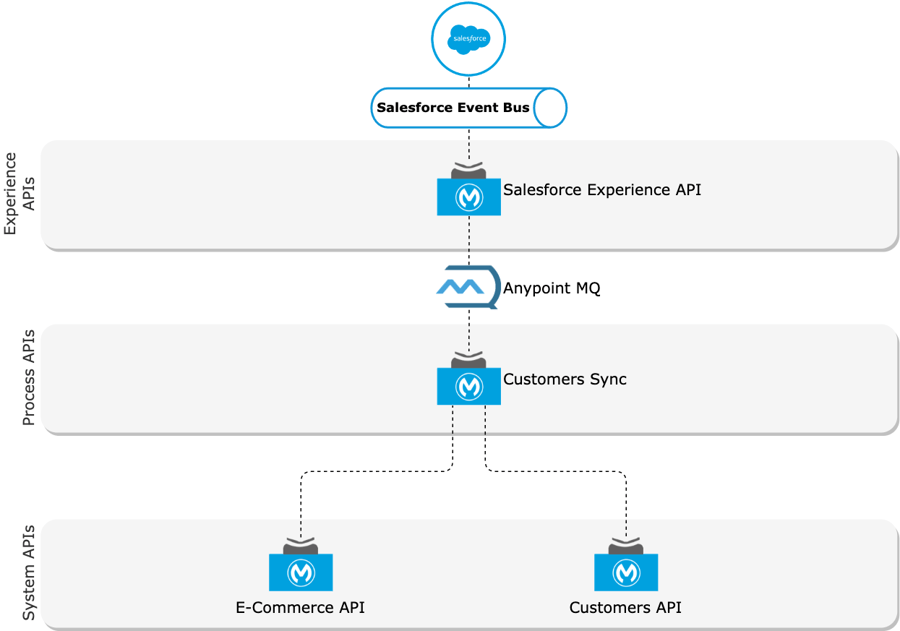

At a high level, MuleSoft connects to the Salesforce Event Bus and listens for events triggered by any Salesforce Cloud. When a new event is produced, MuleSoft receives it and propagates it to the other systems in our IT landscape.

Source: Subscribe to Change Data Capture Events with the Salesforce Connector

Let's go deeper and understand how this is done under the hood:

- The event is published by any Salesforce Cloud to the Salesforce Event Bus.
  - Every event has a unique reference called the Replay ID.
  - Subscribers can use the Replay ID to retrieve past events from the Event Bus.
  - More info: https://developer.salesforce.com/docs/atlas.en-us.238.0.api_streaming.meta/api_streaming/using_streaming_api_durability.htm
- The Mule app connects to the Event Bus through the Salesforce Connector and listens for new events.
  - The connection uses CometD, an implementation of the Bayeux protocol, which transports asynchronous messages over HTTP.
  - More info: https://developer.salesforce.com/docs/atlas.en-us.api_streaming.meta/api_streaming/using_streaming_api_client_connection.htm
- The Salesforce Replay Channel Listener consumes the event from the Event Bus and makes it available for processing in the Mule flow.
- The Replay ID is persisted in an Object Store so the Mule app knows where to resume. If the application goes down for any reason, once it recovers it can consume events starting from the last one received.
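The steps above can be sketched in Python to show the resume-from-last-replay-ID idea. A JSON file stands in for the Mule Object Store, and the subscribe message follows the Bayeux `/meta/subscribe` shape, assuming Salesforce's replay extension reads a `{channel: replayId}` map from `ext.replay` and that delivered events carry their ID at `data.event.replayId`. The class and function names are hypothetical; the Salesforce Connector handles all of this for you in a real Mule app.

```python
import json
import tempfile
from pathlib import Path


class ReplayIdStore:
    """File-backed stand-in for the Mule Object Store (hypothetical)."""

    def __init__(self, path: Path):
        self.path = path

    def _read(self) -> dict:
        return json.loads(self.path.read_text()) if self.path.exists() else {}

    def load(self, channel: str, default: int = -1) -> int:
        # -1 asks for new events only when nothing has been stored yet
        return self._read().get(channel, default)

    def save(self, channel: str, replay_id: int) -> None:
        data = self._read()
        data[channel] = replay_id
        self.path.write_text(json.dumps(data))


def subscribe_message(client_id: str, channel: str, store: ReplayIdStore, msg_id: int) -> dict:
    """Bayeux /meta/subscribe message carrying the replay ID to resume from."""
    return {
        "id": str(msg_id),
        "channel": "/meta/subscribe",
        "clientId": client_id,  # returned by the earlier /meta/handshake
        "subscription": channel,
        "ext": {"replay": {channel: store.load(channel)}},
    }


def on_event(message: dict, store: ReplayIdStore) -> int:
    """Process one delivered event, then record its replay ID."""
    replay_id = message["data"]["event"]["replayId"]
    # ... hand message["data"]["payload"] to the flow here ...
    store.save(message["channel"], replay_id)  # save only after processing succeeds
    return replay_id


store = ReplayIdStore(Path(tempfile.mkdtemp()) / "replay-ids.json")
channel = "/data/AccountChangeEvent"
first = subscribe_message("client-123", channel, store, msg_id=1)
on_event({"channel": channel, "data": {"event": {"replayId": 42}, "payload": {}}}, store)
after_restart = subscribe_message("client-456", channel, store, msg_id=2)
```

Saving the replay ID only after processing means a crash mid-processing replays that event rather than skipping it, so downstream handling should tolerate duplicates.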

Use Case using the API-led approach

Using the API-led approach, we can extend the value of each API through reusability. As with building blocks, each API connects to the others, expanding the number of use cases in which the API can be reused.

From an architectural point of view, we can leverage the API-led approach by distributing responsibilities across the API layers, decoupling our architecture so that it is scalable and pluggable.

ACME LLC is a prominent retail company that uses Salesforce Sales Cloud and Service Cloud as its main CRM and customer support platform. Salesforce holds its customer master data, and ACME's challenge is keeping this data synchronised with its other systems: Accounts from Salesforce must be synced with the e-commerce platform, and Contacts with an internal legacy customer system.

Let's discuss the purpose of each of these APIs:

Experience API

The Salesforce Experience API encapsulates the logic for connecting to and listening for notifications on the Salesforce Event Bus, and lets us persist and propagate these events through a message broker.

The message broker is important here because it adds reliability to our use case (see reliability patterns for more information).

The objective of this API and the broker is to ensure that no message is lost due to failures or downtime in the downstream APIs. With this in mind, this API must receive each event from Salesforce and publish it to a queue as quickly as possible, so that it always has the resources available to keep receiving notifications and publishing them to the queue.
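The "publish as quickly as possible" rule can be sketched as a thin router that inspects only the event header and forwards the raw payload to a queue, leaving all transformation to the Process API. The in-memory broker stands in for Anypoint MQ, and the routing table reuses the queue names from the Anypoint MQ setup described in this article; the class and function names are hypothetical.

```python
import json
from collections import defaultdict

# Object-to-queue routing, using the queue names from the Anypoint MQ setup
QUEUE_BY_ENTITY = {"Account": "accounts-queue", "Contact": "contacts-queue"}


class InMemoryBroker:
    """Stand-in for Anypoint MQ: queue name -> list of message bodies."""

    def __init__(self):
        self.queues = defaultdict(list)

    def publish(self, queue: str, body: str) -> None:
        self.queues[queue].append(body)


def forward_event(payload: dict, broker: InMemoryBroker):
    """Publish the raw event and return at once; no transformation happens here."""
    entity = payload["ChangeEventHeader"]["entityName"]
    queue = QUEUE_BY_ENTITY.get(entity)
    if queue is None:
        return None  # not an object this integration synchronises
    broker.publish(queue, json.dumps(payload))
    return queue
```

Keeping this step free of lookups and downstream calls is what lets the listener keep pace with the Event Bus even when the target systems are slow.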

Process API

The Customer Sync Process API is responsible for all the logic, orchestration, transformation and enrichment of the customer synchronisation process. It consumes the events published to the message broker, transforms the data and integrates with the System APIs.

This API also makes the synchronisation process resilient. In case of failures or connectivity issues with the downstream systems, the message is not acknowledged and is later redelivered by the broker. We can also apply fallback patterns, such as activating a circuit breaker or delaying message redelivery, to further increase the resilience of the process.
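Here is a minimal sketch of the circuit-breaker fallback mentioned above, written in Python rather than as a Mule flow. After a number of consecutive failures the breaker opens and messages are nacked immediately, so the broker redelivers them later without the Process API hammering a system that is down; after a cooldown, one trial call is let through. The class, the thresholds and the `"ack"`/`"nack"` return values are illustrative assumptions.

```python
import time


class CircuitBreaker:
    """Consecutive-failure circuit breaker with a cooldown (illustrative)."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def allow_request(self) -> bool:
        if self.opened_at is None:
            return True  # closed: calls flow normally
        # Half-open: allow a trial call once the cooldown has elapsed
        return self.clock() - self.opened_at >= self.reset_timeout

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None

    def record_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = self.clock()  # (re)open and restart the cooldown


def handle_message(body, call_system_api, breaker: CircuitBreaker) -> str:
    """Return 'ack' or 'nack'; a nack lets the broker redeliver the message later."""
    if not breaker.allow_request():
        return "nack"
    try:
        call_system_api(body)
    except ConnectionError:
        breaker.record_failure()
        return "nack"
    breaker.record_success()
    return "ack"
```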

System API

The E-Commerce and Customers System APIs encapsulate the complexity of the target systems, exposing them as reusable APIs across our IT landscape.

The Customers API encapsulates a legacy SOAP API and exposes it as a REST API. This removes the complexity of connecting over a different protocol, establishes a canonical data model, promotes governance and makes the system easy for other APIs to consume.

The E-Commerce API connects to an e-commerce platform that offers a REST API. Here, the System API hides the platform's default data models, establishes a canonical data model aligned with the business requirements of the e-commerce implementation, and promotes governance and reusability across the IT landscape.
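To illustrate what the Customers System API hides from its consumers, here is a sketch that maps a Salesforce Contact to a canonical Customer and wraps it in a SOAP 1.1 envelope for the legacy system. The canonical field names, the `UpsertCustomer` operation and the legacy namespace are all hypothetical; only the SOAP envelope namespace is standard.

```python
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"
LEGACY_NS = "http://legacy.example.com/customers"  # hypothetical legacy namespace
ET.register_namespace("soapenv", SOAP_ENV)
ET.register_namespace("cus", LEGACY_NS)


def contact_to_customer(contact: dict) -> dict:
    """Map a Salesforce Contact to a hypothetical canonical Customer model."""
    return {
        "customerId": contact["Id"],
        "firstName": contact.get("FirstName") or "",
        "lastName": contact["LastName"],
        "email": contact.get("Email") or "",
    }


def to_soap_envelope(customer: dict) -> str:
    """Wrap a canonical Customer in a SOAP 1.1 request for the legacy system."""
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    request = ET.SubElement(body, f"{{{LEGACY_NS}}}UpsertCustomer")  # hypothetical operation
    for field_name in ("customerId", "firstName", "lastName", "email"):
        ET.SubElement(request, f"{{{LEGACY_NS}}}{field_name}").text = customer[field_name]
    return ET.tostring(envelope, encoding="unicode")
```

Consumers of the System API only ever see the canonical JSON-friendly dictionary; the SOAP details stay behind the API boundary.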

Running the use case

This conceptual use case demonstrates how you can implement an API-led architecture and run it using your Anypoint Platform account.

Pre-requisites

To understand how to connect to the Salesforce Streaming APIs, take a look at this tutorial first. It guides you step by step through implementing a Mule app that subscribes to Change Data Capture events in Salesforce:

https://developer.mulesoft.com/tutorials-and-howtos/salesforce-connector/subscribe-to-cdc-events/

- Salesforce Developer Account:
  - https://developer.salesforce.com/signup
  - User & Security token: https://help.salesforce.com/s/articleView?id=sf.user_security_token.htm&type=5
- Download the source code of the APIs available here:
  - Salesforce Experience API: https://github.com/gui1207/sfdc-customer-sync/tree/master/sfdc-customers-exp-api
  - Customer Sync Process API: https://github.com/gui1207/sfdc-customer-sync/tree/master/customers-sync-prc-api
  - E-Commerce & Customers System APIs: these are purely conceptual, and we will use a mock to simulate them.
- Anypoint Platform account with an Anypoint MQ subscription

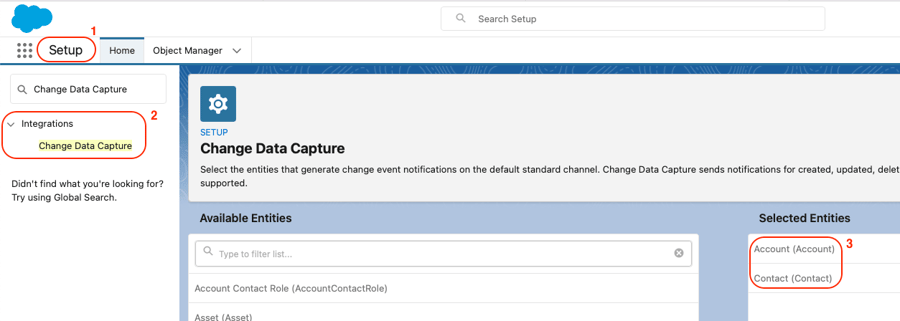

Salesforce - Enabling Notifications

Enable Change Data Capture events so that Salesforce publishes notifications.

Navigate to Setup → Integrations → Change Data Capture and select the Account and Contact objects.

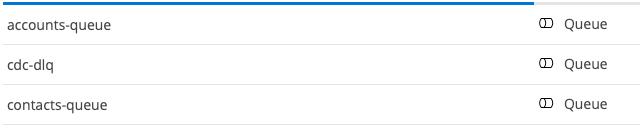

Anypoint MQ - Configuring Queues

Access the MQ menu located in the left navigation bar on the main Anypoint Platform screen.

Create 3 queues:

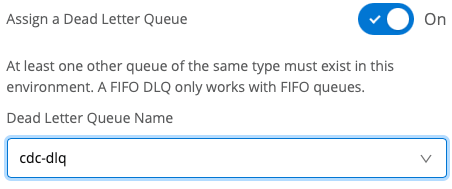

- cdc-dlq: This will be the DLQ where we will send the messages when the failed attempts are exhausted.

- accounts-queue: This queue will handle events for Accounts

- contacts-queue: This queue will handle events for Contacts

For account & contacts queues, enable the "Assign Default Letter Queue" option and select "cdc-dlq".
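The dead-letter behaviour configured above can be modelled with a toy queue: each failed (nacked) delivery puts the message back for redelivery until the configured number of attempts is reached, after which it is rerouted to the DLQ. This is a simplified, hypothetical model for reasoning about the flow, not Anypoint MQ's implementation.

```python
from collections import deque


class QueueWithDlq:
    """Toy queue that reroutes a message to its DLQ after max_deliveries failed attempts."""

    def __init__(self, name: str, dlq: list, max_deliveries: int = 3):
        self.name = name
        self.dlq = dlq
        self.max_deliveries = max_deliveries
        self.messages = deque()
        self.attempts = {}

    def send(self, msg_id: str, body: str) -> None:
        self.messages.append((msg_id, body))

    def deliver(self, handler):
        """Deliver the next message; the handler returns True to ack, False to nack."""
        if not self.messages:
            return None
        msg_id, body = self.messages.popleft()
        self.attempts[msg_id] = self.attempts.get(msg_id, 0) + 1
        if handler(body):
            return "acked"
        if self.attempts[msg_id] >= self.max_deliveries:
            self.dlq.append((msg_id, body))  # attempts exhausted: park it for inspection
            return "dead-lettered"
        self.messages.append((msg_id, body))  # nack: back on the queue for redelivery
        return "requeued"


cdc_dlq = []
contacts_queue = QueueWithDlq("contacts-queue", dlq=cdc_dlq, max_deliveries=2)
```

Sharing one DLQ between both queues, as in the setup above, keeps failed Account and Contact events in a single place for inspection and reprocessing.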

Creating System APIs mocks

As mentioned, the E-Commerce and Customers System APIs are purely conceptual in this example; they only receive the requests sent by the Process API.

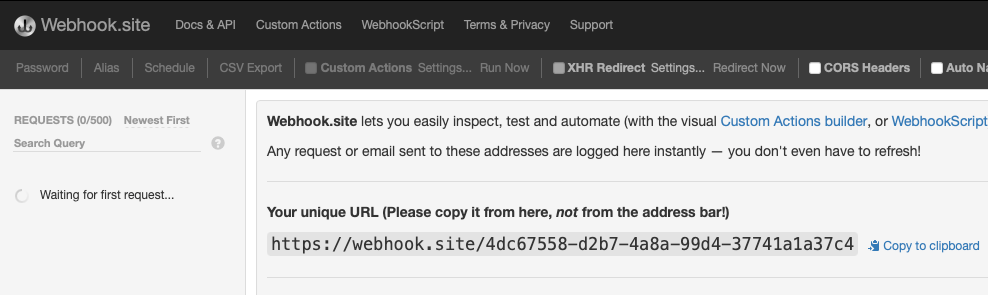

Go to Webhook.site to create an endpoint that simulates the System APIs. With it, you can watch requests arrive in the site's interface in real time and inspect the message structure.
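Before wiring up the Process API, you may want to check that your Webhook.site endpoint receives JSON as expected. This sketch builds and sends a POST with Python's standard library; the URL is a placeholder for the unique one Webhook.site gives you, and the customer fields are invented.

```python
import json
import urllib.request

WEBHOOK_URL = "https://webhook.site/your-unique-id"  # placeholder: copy yours from Webhook.site


def build_mock_request(url: str, customer: dict) -> urllib.request.Request:
    """Build a JSON POST like the one the Process API would send to a System API."""
    data = json.dumps(customer).encode("utf-8")
    return urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )


def send(request: urllib.request.Request) -> int:
    # Performs the real HTTP call; returns the status code from Webhook.site
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

Calling `send(build_mock_request(WEBHOOK_URL, {"customerId": "003X"}))` with your real URL should make the request appear in the Webhook.site interface.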

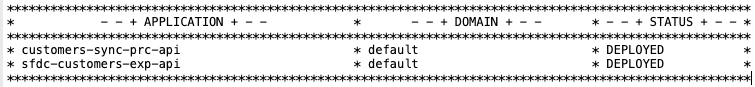

Anypoint Studio - Running the Projects

Import the projects to your Anypoint Studio workspace and modify the following:

Salesforce Experience API

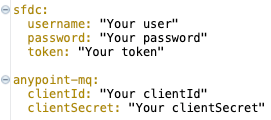

Open the dev-properties.yaml file and update it with your Salesforce and Anypoint MQ credentials.

Customer Sync Process API

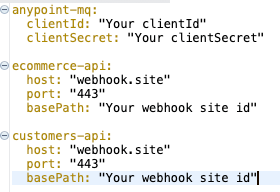

Open the dev-properties.yaml file and update it with your Anypoint MQ credentials and the Webhook endpoint details.

You can create a single endpoint for both System APIs or one endpoint for each.

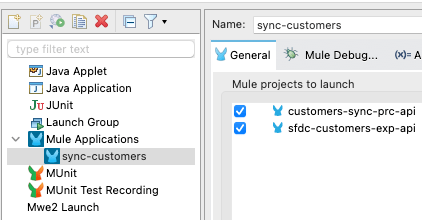

To run both projects in Studio, you will need to create a custom run configuration:

Go to the main menu and select Run → Run Configurations…

1 - Right-click on Mule Applications and then select New:

2 - Rename the configuration, and select both APIs. Click on Apply and then Run.

Once both apps are deployed, log in to Salesforce, create or change any Account or Contact record, and watch the events being consumed by the Mule Experience and Process APIs and delivered to our conceptual System APIs.

Conclusion

Event-driven architectures help you create resilient, decoupled APIs, enabling you to easily extend your use cases across multiple systems in your organisation.

Salesforce Streaming APIs and MuleSoft API-led Connectivity are a perfect match for this approach. Using the Streaming APIs, you can make the most of your Salesforce organisation's resources and build a robust architecture that lets you easily connect new building blocks to your ecosystem.
