In 'What are microservices (part 3)' we touched on different approaches to building a microservices architecture. In this final post of the series on microservices, I will discuss those approaches in more detail.

Request-Response (Synchronous) and Service Discovery

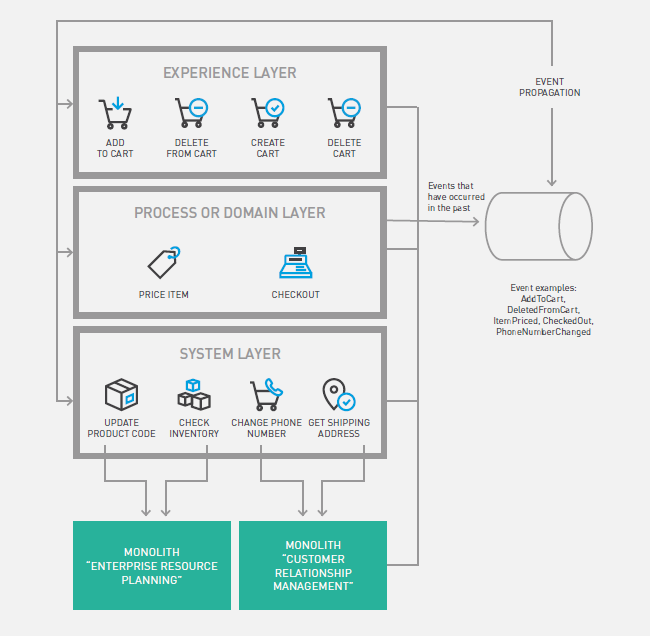

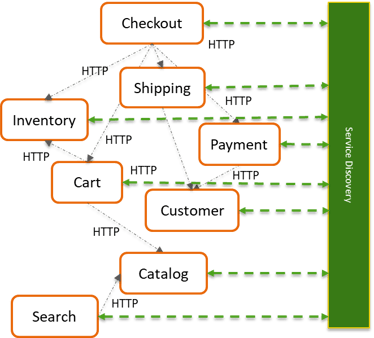

In the examples shown so far in this series, the pattern used is request-response: the services communicate with each other directly through their public HTTP REST APIs. These APIs are formally defined using languages like RAML or Swagger, which are considered the de facto standards for defining and publishing microservice interfaces.
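
To make the request-response style concrete, here is a minimal sketch using only the Python standard library. It assumes a hypothetical `orders` service exposing `GET /orders/<id>` as JSON, and a client that calls it directly and synchronously over HTTP. The service name, path and payload are illustrative; a real service would more likely use a framework and publish its contract in RAML or Swagger.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical data owned by the "orders" service.
ORDERS = {"42": {"id": "42", "status": "SHIPPED"}}


class OrdersHandler(BaseHTTPRequestHandler):
    """Serves GET /orders/<id> as JSON (an illustrative REST endpoint)."""

    def do_GET(self):
        prefix = "/orders/"
        order = ORDERS.get(self.path[len(prefix):]) if self.path.startswith(prefix) else None
        body = json.dumps(order or {"error": "not found"}).encode()
        self.send_response(200 if order else 404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example's console output quiet


def start_orders_service(port=0):
    """Run the service in a background thread; port 0 lets the OS pick a free port."""
    server = HTTPServer(("127.0.0.1", port), OrdersHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get_order(base_url, order_id):
    """Client side: a direct, synchronous call to the other service's public API."""
    with urllib.request.urlopen(f"{base_url}/orders/{order_id}") as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    server = start_orders_service()
    base_url = f"http://127.0.0.1:{server.server_address[1]}"
    print(get_order(base_url, "42"))  # {'id': '42', 'status': 'SHIPPED'}
    server.shutdown()
```

Note that the client has to know `base_url` up front; in a dynamic environment that address is exactly what a service discovery tool provides.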

This pattern is usually adopted in combination with a component called Service Discovery.

Why do we need it? Remember that we are in a distributed context, where network conditions can change quite frequently and services can have dynamically assigned network locations. The services therefore need to be able to find each other at all times. A service discovery tool lets us abstract away the physical location where the services are deployed from the clients that consume them.

When a service starts up or shuts down, it registers itself with (or deregisters itself from) the service discovery tool, communicating that it is alive and what its current location is. It also queries the tool for the addresses of all its dependencies, which it needs to call in order to perform its task.

Examples of Service Discovery tools are Spring Cloud Netflix Eureka and Consul.
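
The register/deregister/lookup cycle described above can be sketched as a toy in-memory registry. This is a hypothetical class, not the API of Eureka or Consul: it assumes each instance re-registers periodically as a heartbeat, and that an instance whose heartbeat is older than a time-to-live (TTL) is presumed dead. The clock is injectable so the expiry behaviour can be demonstrated without waiting.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ServiceRegistry:
    """Toy in-memory registry: service name -> {address: time of last heartbeat}."""

    ttl_seconds: float = 30.0
    clock: Callable[[], float] = time.monotonic  # injectable for testing
    _instances: Dict[str, Dict[str, float]] = field(default_factory=dict)

    def register(self, name: str, address: str) -> None:
        # Called at startup, then periodically as a heartbeat ("still alive, here").
        self._instances.setdefault(name, {})[address] = self.clock()

    def deregister(self, name: str, address: str) -> None:
        # Called on a graceful shutdown.
        self._instances.get(name, {}).pop(address, None)

    def lookup(self, name: str) -> List[str]:
        # Clients ask for a service by name and get back the addresses whose
        # last heartbeat is within the TTL, i.e. instances presumed alive.
        now = self.clock()
        entries = self._instances.get(name, {})
        return sorted(a for a, seen in entries.items() if now - seen <= self.ttl_seconds)


if __name__ == "__main__":
    fake_now = [0.0]
    registry = ServiceRegistry(ttl_seconds=30, clock=lambda: fake_now[0])
    registry.register("orders", "10.0.0.5:8080")
    registry.register("orders", "10.0.0.6:8080")
    fake_now[0] = 20.0
    registry.register("orders", "10.0.0.5:8080")  # only the first instance sends a heartbeat
    fake_now[0] = 40.0
    print(registry.lookup("orders"))  # ['10.0.0.5:8080']
```

The TTL is the design choice that matters here: an instance that crashes never deregisters, so the registry has to notice its silence on its own.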

Event-driven (Asynchronous)

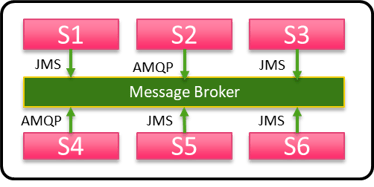

In cases where microservices collaborate to realise a complex business transaction or process, an event-driven approach is also adopted, which fully decouples the services from each other.

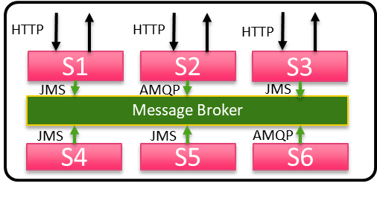

This means that the services no longer need to expose a public API (unless we use a combined approach), as they communicate with each other entirely via events. This is only possible by introducing a new component into the architecture, called a Message Broker.

The message broker is responsible for delivering messages from producers to consumers running in the respective microservices. Its key qualities are high availability and reliability: it guarantees that messages are delivered to their consumers, so if a consumer is down, its messages are delivered when it comes back online.
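
The "delivered when it comes back online" behaviour can be illustrated with a deliberately simplified in-memory broker. This is a hypothetical sketch, not any real broker's API: it assumes each subscriber has its own queue, so messages published while the subscriber is disconnected wait there. Real brokers also persist and replicate these queues, which is where their reliability comes from.

```python
from collections import defaultdict, deque


class InMemoryBroker:
    """Toy broker: one pending-message queue per subscriber (illustrative only)."""

    def __init__(self):
        self._subscribers = defaultdict(set)  # topic -> subscriber ids
        self._queues = defaultdict(deque)     # subscriber id -> pending messages
        self._handlers = {}                   # subscriber id -> callback while online

    def subscribe(self, topic, subscriber_id):
        self._subscribers[topic].add(subscriber_id)

    def publish(self, topic, message):
        # Enqueue for every subscriber, then deliver to those currently online.
        for sub in self._subscribers[topic]:
            self._queues[sub].append(message)
            self._drain(sub)

    def connect(self, subscriber_id, handler):
        # A consumer comes (back) online: its backlog is delivered first.
        self._handlers[subscriber_id] = handler
        self._drain(subscriber_id)

    def disconnect(self, subscriber_id):
        self._handlers.pop(subscriber_id, None)

    def _drain(self, subscriber_id):
        handler = self._handlers.get(subscriber_id)
        queue = self._queues[subscriber_id]
        while handler and queue:
            handler(queue.popleft())


if __name__ == "__main__":
    broker = InMemoryBroker()
    received = []
    broker.subscribe("orders", "billing")
    broker.connect("billing", received.append)
    broker.publish("orders", "order-1")
    broker.disconnect("billing")                # the billing consumer goes down
    broker.publish("orders", "order-2")         # queued, not lost
    broker.connect("billing", received.append)  # back online: backlog delivered
    print(received)  # ['order-1', 'order-2']
```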

Message brokers also provide features such as caching and load balancing and, being asynchronous by nature, they are easy to scale. Standards like JMS and AMQP dominate among the major broker technologies in the industry.
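
Load balancing in a broker usually follows the "competing consumers" idea: several instances of the same consuming service share a stream of messages, and each message goes to exactly one of them. The class below is a hypothetical sketch using simple round-robin; as a point of comparison, RabbitMQ dispatches a queue's messages round-robin among its consumers by default, while Kafka balances load by assigning partitions to the members of a consumer group.

```python
class ConsumerGroup:
    """Competing-consumers sketch: each message goes to exactly one member, round-robin."""

    def __init__(self):
        self._members = []
        self._next = 0

    def join(self, handler):
        # Scaling out: a new instance of the consuming service joins the group.
        self._members.append(handler)

    def deliver(self, message):
        if not self._members:
            raise RuntimeError("no consumers available")
        handler = self._members[self._next % len(self._members)]
        self._next += 1
        handler(message)


if __name__ == "__main__":
    a, b = [], []
    group = ConsumerGroup()
    group.join(a.append)
    group.join(b.append)
    for i in range(4):
        group.deliver(f"msg-{i}")
    print(a, b)  # ['msg-0', 'msg-2'] ['msg-1', 'msg-3']
```

Because producers never address a specific consumer, adding instances to the group spreads the load without any change on the producing side, which is what makes this style easy to scale.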

This component supports the choreography pattern: the services collaborate asynchronously by firing business events, which are published to the message broker. No complex orchestration or transformation takes place in between, as the business logic lives inside the microservices themselves.
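
Choreography can be sketched with a minimal event bus standing in for the broker. The event names (`OrderPlaced`, `PaymentCompleted`, `OrderShipped`) and services are hypothetical. Each service only knows which events it reacts to and which it emits; there is no central coordinator. For readability the bus delivers synchronously, whereas a real broker would deliver asynchronously.

```python
from collections import defaultdict


class EventBus:
    """Minimal synchronous stand-in for a message broker (illustrative only)."""

    def __init__(self):
        self._handlers = defaultdict(list)
        self.log = []  # every event type published, in order

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        self.log.append(event_type)
        for handler in self._handlers[event_type]:
            handler(payload)


def payment_service(bus):
    def on_order_placed(order):
        # ... charge the customer: the business logic lives inside the service ...
        bus.publish("PaymentCompleted", order)
    bus.subscribe("OrderPlaced", on_order_placed)


def shipping_service(bus):
    def on_payment_completed(order):
        bus.publish("OrderShipped", order)
    bus.subscribe("PaymentCompleted", on_payment_completed)


if __name__ == "__main__":
    bus = EventBus()
    payment_service(bus)
    shipping_service(bus)
    bus.publish("OrderPlaced", {"order_id": "42"})  # emitted by the order service
    print(bus.log)  # ['OrderPlaced', 'PaymentCompleted', 'OrderShipped']
```

Adding a new step (say, a notification service reacting to `OrderShipped`) means adding one more subscriber, without touching the existing services.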

One of the most popular technologies used as a message broker at the moment is Apache Kafka.

Composite (Hybrid)

Of course, nothing prevents us from mixing the two approaches: the microservices are then composed through a mix of direct calls over HTTP and indirect calls through a message broker.
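
A common way to mix the two is to use a synchronous call where the caller needs an answer immediately, and an event for everything downstream. The sketch below assumes a hypothetical order service: `check_stock` stands in for an HTTP call to an inventory service (located via service discovery), and `publish` stands in for the message broker client. Both are injected as plain callables so the example stays self-contained.

```python
from typing import Callable


def place_order(order: dict,
                check_stock: Callable[[str], bool],
                publish: Callable[[str, dict], None]) -> str:
    """Hybrid sketch: request-response first, then an asynchronous business event."""
    # Request-response: the caller must know right now whether the item is available.
    if not check_stock(order["sku"]):
        return "REJECTED"
    # Event-driven: fire and forget; billing, shipping, etc. react via the broker.
    publish("OrderPlaced", order)
    return "ACCEPTED"


if __name__ == "__main__":
    events = []
    stock = {"sku-1": True, "sku-2": False}  # fake inventory responses
    print(place_order({"sku": "sku-1"}, stock.get, lambda t, p: events.append(t)))  # ACCEPTED
    print(place_order({"sku": "sku-2"}, stock.get, lambda t, p: events.append(t)))  # REJECTED
    print(events)  # ['OrderPlaced']
```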

Microservices Technologies

As you can imagine, we’re seeing an explosion of technologies you can use to implement microservices at the moment. Which one to use really depends on the language, framework or capabilities we expect to use, and this may depend, in turn, on the skills in our team, the product licenses we already have, and so on.

As a general principle, any language or framework that allows us to expose a REST interface, or to use messaging APIs or protocols (e.g. JMS or AMQP), is a candidate for implementing a microservice. Remember, one of the main points of adopting this kind of architecture is that the technology chosen for one service doesn’t impact the rest of the system, so we are free to choose whatever is best for each purpose.

To mention some of the popular microservice-oriented frameworks, you may opt for Java ones (Spring Boot and Spring Cloud, Dropwizard, or Jersey, the open-source reference implementation of JAX-RS), Node.js (Express, Sails), Scala (Akka, Play, Spray), Python (Flask, Tornado) and many more.

This is not meant to be an exhaustive list at all; there are countless options to choose from.

What about the distribution of our microservices? Where are we supposed to deploy them, and how are we going to manage them (especially when they start growing in number)?

To answer these questions, we need to introduce the concepts of Application Containers, Container Orchestrators and cloud-based Managed Container Services.

Application Containers

Application Containers are a big topic right now, and they are becoming the preferred way to distribute microservices. I don’t want to go too deep into this topic: you can find plenty of information about how containers work and what their differences and advantages are compared to traditional physical or virtual machines. By far the most popular container technology today is Docker, and the Docker documentation includes a full explanation of what a container is.

All you need to know at this stage is that a container consists of the application plus the bare minimum needed to execute and support it. A container is meant to be portable across physical hosts, virtual machines, cloud providers and environments: you should be able to run your container on your laptop, in the DEV environment or in Production in exactly the same way. The only external dependency of a container is the technology needed to run containers themselves (for Docker, the Docker Engine).

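Usually the container image is built on a very lightweight Linux distribution (like TinyCore or Alpine Linux), containing only the bare essential OS libraries the application needs to run. Minimal distributions like CoreOS, by contrast, are designed to be the host OS on which the containers run.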

If you look at the adjectives describing a container (lightweight, isolated, portable, etc.), you can see why it is a perfect match for distributing microservices!

Container Orchestrators

Usually the containers are used in combination with Container Management technologies, also known as Container Orchestrators or Schedulers.

Remember that microservices are meant to be deployed as distributed applications. This means we need to deal with concerns such as high availability, clustering, load balancing, scaling service instances on the fly, rolling upgrades, and managing dependencies and constraints. Luckily for us, this is exactly what these products take care of.
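
Orchestrators handle much of this through a reconciliation loop: they repeatedly compare the desired state (for example, "4 replicas of the orders service") with what is actually running, and act to close the gap. Kubernetes, for instance, is built around controllers that work this way. The function below is a hypothetical, heavily simplified single pass of such a loop, covering scaling and the replacement of unhealthy instances; it is not any orchestrator's real API.

```python
from typing import List, Set, Tuple

Action = Tuple[str, str]  # ("start", service) or ("stop", instance name)


def reconcile(service: str, desired_replicas: int,
              running: List[str], healthy: Set[str]) -> List[Action]:
    """One pass of a toy reconciliation loop.

    Compares the desired replica count with the observed instances and returns
    the actions needed to converge. An orchestrator would run this continuously.
    """
    # Self-healing: instances failing their health checks are stopped...
    actions: List[Action] = [("stop", name) for name in running if name not in healthy]
    alive = [name for name in running if name in healthy]
    if len(alive) < desired_replicas:
        # ...and replaced; the same branch handles scaling out.
        actions += [("start", service)] * (desired_replicas - len(alive))
    else:
        # Scaling in: stop the surplus healthy instances.
        actions += [("stop", name) for name in alive[desired_replicas:]]
    return actions


if __name__ == "__main__":
    running = ["orders-1", "orders-2", "orders-3"]
    healthy = {"orders-1", "orders-3"}  # orders-2 failed its health check
    print(reconcile("orders", 4, running, healthy))
    # [('stop', 'orders-2'), ('start', 'orders'), ('start', 'orders')]
```

Declaring the desired state and letting a loop converge on it, rather than scripting each step, is what allows orchestrators to recover automatically from failures nobody anticipated.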

Among the most popular technologies at the moment are Kubernetes, Apache Mesos and Docker Swarm.

Managed container services

If you don’t want to worry too much about the underlying infrastructure, you may opt for a managed container service, delegating the operations above to a cloud provider.

All of the main vendors now provide cloud platforms that use all (or many) of the technologies mentioned above, transparently to the end user. Some examples are Oracle Application Container Cloud Service, Amazon’s AWS Elastic Beanstalk, Google App Engine and Microsoft’s Azure App Service.

In a nutshell, these platforms let us upload our microservices in a packaged format (like JAR, WAR or ZIP), specify a very basic configuration (such as the command needed to execute the service, the environment variables the application needs, the ports to open, etc.) and then, behind the scenes, the platform provisions a new container and deploys the application into it. Once the container has started, the full lifecycle of our distributed application can be managed via the platform (load balancing, scaling, starting and stopping containers, etc.).
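
Because the platform supplies settings such as the port through environment variables, a service packaged this way typically reads its configuration from the environment instead of hard-coding it. That is also what lets the same package run unchanged on a laptop, in DEV or in Production. The sketch below assumes hypothetical variable names (`PORT`, `DATABASE_URL`) and defaults; many platforms pass the listening port in a variable called `PORT`, but check your platform's documentation for the exact names it injects.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class ServiceConfig:
    port: int
    database_url: str


def load_config(env: Mapping[str, str] = os.environ) -> ServiceConfig:
    """Build the service configuration from environment variables (names are assumptions)."""
    return ServiceConfig(
        port=int(env.get("PORT", "8080")),         # port the platform routes traffic to
        database_url=env.get("DATABASE_URL", ""),  # differs per environment
    )


if __name__ == "__main__":
    print(load_config({"PORT": "9000"}))  # ServiceConfig(port=9000, database_url='')
```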

Conclusion

We’ve finally reached the end of this series!

I tried to give a 360-degree view of this topic without going too deep into the details, which was not really the point of this series.

I’m sure I’ll be back with more microservices-related posts in the future, so make sure you subscribe to our updates so you don’t miss them!